Basics

A programming model for large-scale distributed data processing

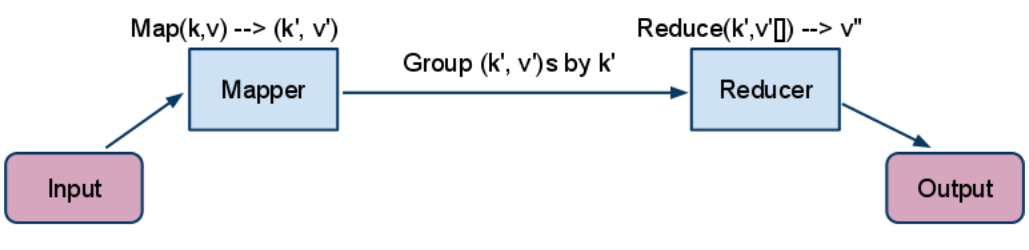

Hadoop MapReduce jobs are divided into a set of map tasks and reduce tasks that run in a distributed fashion on a cluster of computers. Each task works on the small subset of the data it has been assigned so that the load is spread across the cluster.

Basic Functionality

Why different keys ? Do we really need two keys ?

At first it doesn't seem that we need different keys. but have a closer look at API its very obvious.

Example : How to find max temp of a year using MapReduce ?

Ans : use following map reduce,

1) The map function merely extracts the year and the air temperature (indicated in bold text), and emits them as its output

(1950, 0)

(1950, 22)

(1950, −11)

(1949, 111)

(1949, 78)

2) This processing sorts and groups the key-value pairs by key. So, continuing the example, our reduce function sees the following input:

(1949, [111, 78])

(1950, [0, 22, −11])

All the reduce function has to do now is iterate through the list and pick up the maximum reading.

.https://hadoop.apache.org/docs/r2.7.2/api/index.html?org/apache/hadoop/mapreduce/Mapper.html

The input types of the reduce function must match the output types of the map function:

Job job = new Job();

job.setJarByClass(MaxTemperature.class);

job.setJobName("Max temperature");

FileInputFormat.addInputPath(job, new Path(args[0]));

FileOutputFormat.setOutputPath(job, new Path(args[1]));

job.setMapperClass(MaxTemperatureMapper.class);

job.setReducerClass(MaxTemperatureReducer.class);

job.setOutputKeyClass(Text.class); // for reducer ... setMapOutputKeyClass()

job.setOutputValueClass(IntWritable.class); // for reducer ... setMapOutputValueClass()

System.exit(job.waitForCompletion(true) ? 0 : 1);

Note

Hadoop divides the input to a MapReduce job into fixed-size pieces called input splits, or just splits. Hadoop creates one map task for each split, which runs the user-defined map function for each record in the split.

Map tasks write their output to the local disk, not to HDFS. Why is this? Map output is intermediate output: it’s processed by reduce tasks to produce the final output, and once the job is complete, the map output can be thrown away. So, storing it in HDFS with replication would be overkill. If the node running the map task fails before the map output has been consumed by the reduce task, then Hadoop will automatically rerun the map task on another node to re-create the map output.

Reduce tasks don’t have the advantage of data locality. The sorted map outputs have to be transferred across the network to the node where the reduce task is running, where they are merged and then passed to the user-defined reduce function. The output of the reduce is normally stored in HDFS for reliability.For each HDFS block of the reduce output, the first replica is stored on the local node, with other replicas being stored on off-rack nodes for reliability.

Dotted arrows show data transfer on same machine.

Solid arrow show data transfer across machines.

Combiner

Its a reducer on the mapper , so that data transfer across nodes is minimized.

The combiner function doesn’t replace the reduce function. (How could it? The reduce function is still needed to process records with the same key from different maps.)

job.setMapperClass(MaxTemperatureMapper.class);

job.setCombinerClass(MaxTemperatureReducer.class);

job.setReducerClass(MaxTemperatureReducer.class);

Hadoop Streaming

Hadoop provides an API to MapReduce that allows you to write your map and reduce functions in languages other than Java. Hadoop Streaming uses Unix standard streams as the interface between Hadoop and your program, so you can use any language that can read standard input and write to standard output to write your MapReduce program.

A programming model for large-scale distributed data processing

- Simple, elegant concept

- Restricted, yet powerful programming construct

- Building block for other parallel programming tools

- Extensible for different applications

Hadoop MapReduce jobs are divided into a set of map tasks and reduce tasks that run in a distributed fashion on a cluster of computers. Each task works on the small subset of the data it has been assigned so that the load is spread across the cluster.

- The map tasks generally load, parse, transform, and filter data.

- Each reduce task is responsible for handling a subset of the map task output.

Basic Functionality

Why different keys ? Do we really need two keys ?

At first it doesn't seem that we need different keys. but have a closer look at API its very obvious.

Example : How to find max temp of a year using MapReduce ?

Ans : use following map reduce,

1) The map function merely extracts the year and the air temperature (indicated in bold text), and emits them as its output

(1950, 0)

(1950, 22)

(1950, −11)

(1949, 111)

(1949, 78)

2) This processing sorts and groups the key-value pairs by key. So, continuing the example, our reduce function sees the following input:

(1949, [111, 78])

(1950, [0, 22, −11])

All the reduce function has to do now is iterate through the list and pick up the maximum reading.

.https://hadoop.apache.org/docs/r2.7.2/api/index.html?org/apache/hadoop/mapreduce/Mapper.html

- org.apache.hadoop.mapreduce.Mapper

- it has just 4 methods

- clean(context) setup(context) map(keyIn, valueIn, context) & run(context)

- first calls setup(Context),

- followed by map(Object, Object, Context) for each key/value pair in the InputSplit.

- Finally cleanup(Context) is called.

The input types of the reduce function must match the output types of the map function:

Job job = new Job();

job.setJarByClass(MaxTemperature.class);

job.setJobName("Max temperature");

FileInputFormat.addInputPath(job, new Path(args[0]));

FileOutputFormat.setOutputPath(job, new Path(args[1]));

job.setMapperClass(MaxTemperatureMapper.class);

job.setReducerClass(MaxTemperatureReducer.class);

job.setOutputKeyClass(Text.class); // for reducer ... setMapOutputKeyClass()

job.setOutputValueClass(IntWritable.class); // for reducer ... setMapOutputValueClass()

System.exit(job.waitForCompletion(true) ? 0 : 1);

Note

- First all map taks is done , then all reducer

- For example, we can see that the job was given an ID of job_local26392882_0001, and it ran one map task and one reduce task (with the following IDs: attempt_local26392882_0001_m_000000_0 and attempt_local26392882_0001_r_000000_0). Knowing the job and task IDs can be very useful when debugging MapReduce jobs.

Hadoop divides the input to a MapReduce job into fixed-size pieces called input splits, or just splits. Hadoop creates one map task for each split, which runs the user-defined map function for each record in the split.

Map tasks write their output to the local disk, not to HDFS. Why is this? Map output is intermediate output: it’s processed by reduce tasks to produce the final output, and once the job is complete, the map output can be thrown away. So, storing it in HDFS with replication would be overkill. If the node running the map task fails before the map output has been consumed by the reduce task, then Hadoop will automatically rerun the map task on another node to re-create the map output.

Reduce tasks don’t have the advantage of data locality. The sorted map outputs have to be transferred across the network to the node where the reduce task is running, where they are merged and then passed to the user-defined reduce function. The output of the reduce is normally stored in HDFS for reliability.For each HDFS block of the reduce output, the first replica is stored on the local node, with other replicas being stored on off-rack nodes for reliability.

Dotted arrows show data transfer on same machine.

Solid arrow show data transfer across machines.

Combiner

Its a reducer on the mapper , so that data transfer across nodes is minimized.

The combiner function doesn’t replace the reduce function. (How could it? The reduce function is still needed to process records with the same key from different maps.)

job.setMapperClass(MaxTemperatureMapper.class);

job.setCombinerClass(MaxTemperatureReducer.class);

job.setReducerClass(MaxTemperatureReducer.class);

Hadoop Streaming

Hadoop provides an API to MapReduce that allows you to write your map and reduce functions in languages other than Java. Hadoop Streaming uses Unix standard streams as the interface between Hadoop and your program, so you can use any language that can read standard input and write to standard output to write your MapReduce program.

- Ruby

- Python

No comments:

Post a Comment